The 5 Core Qualities

As we discuss concepts (for the sake of this conversation, consider a "concept" to be an idea, a software project, or some tangible product), it becomes important to define a concept's potential for success. I argue that there are five core qualities which can be used as predictors of a concept's success. The classification of these core qualities is not perfect, and some of the qualities overlap. However, it is a good starting point and will allow concepts to be compared alongside each other. This is an important theme moving forward. Recall that a good deal of the content of this blog is theoretical in nature; consequently, there are often no actual results by which to gauge success. It is therefore imperative that we have a means to estimate how successful an idea may become. In particular, this estimator can be used to decide which projects should be afforded more research and which should be tabled. I'd like to introduce the core qualities here, but I hope to expand on them and construct more precise definitions at some point.

Value

Does the concept have inherent value, or can the concept bring some value? Value is perhaps the most nebulous of the core qualities. For example, it is difficult to quantify the value obtained from a piece of software and even more challenging to compare that value against the value derived from a tangible good. Nevertheless, we must strive to quantify value so that we can predict success. Most people will probably see value as the most important core quality, though I suspect it is also the hardest to assess.

Accessibility

Is the concept accessible? Consider the cost and resources required to execute the concept. This core quality is assessed with respect to the average person, not the concept's intended consumer; this approach leads to less biased comparisons across concepts. For like-minded concepts, consider a domain-specific assessment instead.

For example, if a concept describes a manufacturing process for piston rings which will not wear over time, what are the execution requirements? Almost certainly there is a need for precision manufacturing equipment and specialized raw materials. Accessibility for the average person, then, would be low. Conversely, a concept describing a new way to tie a shoe would have a very high accessibility score because nearly everyone in the world has the equipment and ability to execute the concept.

Usability

How easy is it to execute the concept? Here, the definition of "usability" changes from concept to concept. For software, usability might describe the user interface. For a car, usability may describe steering, acceleration, and braking. Some people may think of usability as a measure of simplicity or intuitiveness. A classic example: if the software interface is not intuitive and the instruction manual is written in Klingon, then the concept scores low on usability because most people cannot easily use the software.

As a note, when I am involved in designing software, I often apply the "mother rule" to common or complicated functionality: I ask whether my mother could use the functionality without someone explaining it to her. This turns out to be a good litmus test for the other developers on my team as well, perhaps because they can relate to the situation. To wit, most developers have fixed their mother's computer, fielded calls about email attachments, or configured a new computer.

Awareness

How are others made aware of the concept? It does not matter how awesome my concept is or how easy it is to implement or execute: if no one knows about my concept, it may as well not exist. Bringing awareness to a concept can sometimes be challenging. It is not my intent to discuss how to generate awareness; rather, my intent is simply to state that awareness of a concept is fundamental in predicting success. More to the point, a concept needs some means of generating awareness.

Reliability

Is the concept reliable? This is another nebulous determinant, but still an important one. For a car manufacturer, the car needs to run well with little maintenance. For iPhone applications, the app must not crash frequently (or at all). How we measure reliability will differ between concepts, but we should be able to capture some measure of it.

Now What?

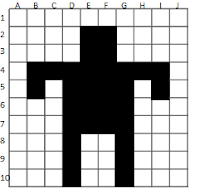

We need a way to capture all the core qualities at once. How can we easily convey a concept's potential for success? I think a good visualization should be able to convey its message without words (legends, keys, tips, etc.). Radar charts are our friend here. These charts render multivariate data in an easy-to-understand manner: at a glance, we can see how a concept's core qualities rank with respect to each other.

If you are not familiar with radar charts, I think it is easier to look at an imperfect concept before an ideal (or perfect) one. Take a look at the chart below; hopefully you can infer that the concept lacks reliability, is average with respect to value and awareness, and scores well on usability and accessibility.

[Figure: Poor Reliability]
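To make the chart concrete, here is a minimal Python sketch of the geometry behind a radar chart. It assumes a 0-10 scale for each quality and draws the spokes in the order the qualities are introduced above. The `Concept` class, the `radar_vertices` function, and the scores for the "Poor Reliability" concept are all hypothetical, chosen only to roughly match the chart's description.

```python
import math
from dataclasses import dataclass

# The five core qualities, in the order they are drawn around the chart.
QUALITIES = ("value", "accessibility", "usability", "awareness", "reliability")


@dataclass
class Concept:
    """A concept scored on each core quality (hypothetical 0-10 scale)."""
    name: str
    scores: dict[str, float]

    def ordered_scores(self) -> list[float]:
        # Scores in chart order; a missing quality raises KeyError.
        return [self.scores[q] for q in QUALITIES]


def radar_vertices(concept: Concept) -> list[tuple[float, float]]:
    """Place each score on its own spoke of the radar chart.

    Spoke i points at angle 2*pi*i/n (measured clockwise from 12 o'clock),
    and a score r sits at distance r from the center.
    """
    values = concept.ordered_scores()
    n = len(values)
    points = []
    for i, r in enumerate(values):
        angle = math.pi / 2 - 2 * math.pi * i / n
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


# Hypothetical scores loosely matching the "Poor Reliability" chart.
poor_reliability = Concept(
    "Poor Reliability",
    {"value": 5, "accessibility": 8, "usability": 8, "awareness": 5, "reliability": 2},
)
for quality, (x, y) in zip(QUALITIES, radar_vertices(poor_reliability)):
    print(f"{quality:>13}: ({x:6.2f}, {y:6.2f})")
```

A plotting library would connect these vertices (closing the loop back to the first one) and fill the resulting polygon; the short reliability spoke is what makes the shape visibly lopsided.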

Comparing Concepts

Comparing concepts against each other is an art. We can look at a number of concepts simultaneously:

[Figure: Comparisons]

While somewhat helpful, this is not ideal. It is difficult to rank these concepts from best to worst (most likely to least likely to succeed). My brain cannot easily quantify and rank these concepts, with the notable exception of the ideal concept.

I have tried various strategies for comparing concepts, with limited success. From assigning "point values" and success coefficients, to coloring the points, to calculating volume, I have not found anything that works better than intuition derived from some form of visual representation. And I think there is inherent value in gut feelings.
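One of those strategies, collapsing a radar chart into a single number, can be sketched as the area of the radar polygon. Reading "volume" as the two-dimensional area the chart encloses is an assumption, and the functions below are hypothetical. They also illustrate one reason such a number can mislead: with evenly spaced spokes, the area depends on which qualities sit next to each other, so the same scores can yield very different areas under a different axis order.

```python
import math


def radar_area(scores: list[float]) -> float:
    """Area enclosed by a radar polygon with evenly spaced spokes.

    Adjacent spokes are 2*pi/n apart, so the slice between spokes i and i+1
    is a triangle of area 0.5 * r_i * r_{i+1} * sin(2*pi/n).
    """
    n = len(scores)
    if n < 3:
        raise ValueError("a radar polygon needs at least three spokes")
    wedge = math.sin(2 * math.pi / n)
    return 0.5 * wedge * sum(scores[i] * scores[(i + 1) % n] for i in range(n))


def area_fraction(scores: list[float], max_score: float = 10.0) -> float:
    """Area relative to the ideal concept, where every quality is max_score."""
    return radar_area(scores) / radar_area([max_score] * len(scores))


# Same scores, different spoke order (hypothetical values).
adjacent = [10, 10, 0, 0, 0]    # two strong qualities side by side
separated = [10, 0, 10, 0, 0]   # the same two strengths, not adjacent
print(area_fraction(adjacent))   # about 0.2
print(area_fraction(separated))  # 0.0: no adjacent pair of spokes is nonzero
```

Both concepts have identical scores, yet one appears to have no area at all, which is consistent with a single "point value" being less trustworthy than simply looking at the chart.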

[Figure: Multiple Concepts]

Sometimes I am evaluating multiple concepts for which usability does not matter much; this happens often with software projects I write for myself (I simply do not care whether anyone other than me can use the software). I guess what I am trying to say (poorly) is that I need to evaluate groups of concepts with unique constraints on the set (i.e., "usability does not matter" or "awareness is inconsequential"). Regardless of the constraints, if I know what I am looking for, it becomes easier to process the radar charts.
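Constraints such as "usability does not matter" can be expressed by leaving those qualities out before comparing. The sketch below is hypothetical: `constrained_score`, `rank_concepts`, and the two personal tools with their scores are illustrative only. It scores each concept by the mean of the qualities that still matter and sorts from most to least promising; the mean is an arbitrary choice, and a weighted sum or the weakest remaining quality would fit the same structure.

```python
from statistics import mean

QUALITIES = ("value", "accessibility", "usability", "awareness", "reliability")


def constrained_score(scores: dict[str, float],
                      ignore: frozenset[str] = frozenset()) -> float:
    """Mean score over the qualities that still matter for this set of concepts."""
    relevant = [scores[q] for q in QUALITIES if q not in ignore]
    if not relevant:
        raise ValueError("every quality was ignored")
    return mean(relevant)


def rank_concepts(concepts: dict[str, dict[str, float]],
                  ignore: frozenset[str] = frozenset()) -> list[str]:
    """Concept names ordered from most to least promising under the constraint."""
    return sorted(concepts,
                  key=lambda name: constrained_score(concepts[name], ignore),
                  reverse=True)


# Hypothetical personal tools: only I will use them, and no one needs to hear about them.
personal_tools = {
    "tool_a": {"value": 8, "accessibility": 7, "usability": 1, "awareness": 2, "reliability": 8},
    "tool_b": {"value": 6, "accessibility": 6, "usability": 9, "awareness": 2, "reliability": 5},
}
print(rank_concepts(personal_tools))  # ['tool_b', 'tool_a']
print(rank_concepts(personal_tools, ignore=frozenset({"usability", "awareness"})))  # ['tool_a', 'tool_b']
```

Dropping the irrelevant qualities flips the ranking: `tool_b` wins only because of a usability score that, for a personal project, does not matter.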

Takeaway

The main message to take away is that we need a way to quantify a concept's potential for success. This is important because I will be talking about a wide variety of concepts, and it is valuable to have a sense of which projects I should pursue. By defining and understanding the five core qualities, I can assign unbiased values to them and represent those assignments as a radar chart. This permits me to be "roughly right" in the "resource assignment" phase of a concept. I simply cannot afford to invest in concepts that show little chance of success.